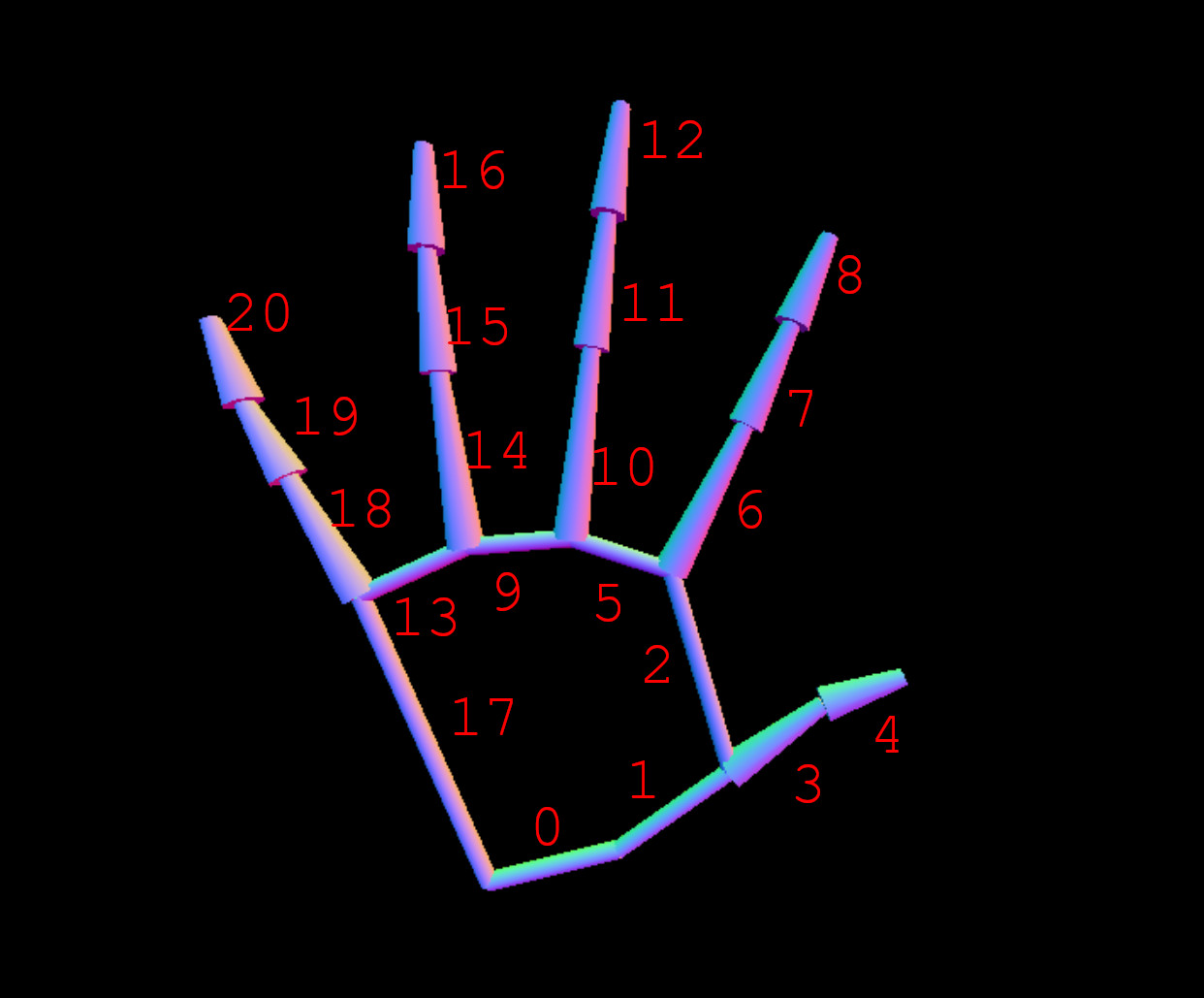

# Model: Handpose

- Powered by TensorFlow's Handpose

- Debugger remixed from @LingDong-'s handpose-facemesh-demos on GitHub

- 🖐 21 3D hand landmarks

- 1️⃣ Only one hand at a time is supported

- 🧰 Includes THREE r124, TensorFlow 2.1

This model includes a fingertip raycaster, center of palm object, and a minimal THREE environment which doubles as a basic debugger for your project.

# Usage

# With defaults

```js
handsfree = new Handsfree({handpose: true})
handsfree.start()
```

# With config

```js
handsfree = new Handsfree({
  handpose: {
    enabled: true,

    // The backend to use: 'webgl' or 'wasm'
    // 🚨 Currently only webgl is supported
    backend: 'webgl',

    // How many frames to go without running the bounding box detector.
    // Set to a lower value if you want a safety net in case the mesh detector produces consistently flawed predictions.
    maxContinuousChecks: Infinity,

    // Threshold for discarding a prediction
    detectionConfidence: 0.8,

    // A float representing the threshold for deciding whether boxes overlap too much in non-maximum suppression. Must be between [0, 1]
    iouThreshold: 0.3,

    // A threshold for deciding when to remove boxes based on score in non-maximum suppression.
    scoreThreshold: 0.75
  }
})
```
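To make `iouThreshold` more concrete: intersection over union (IoU) measures how much two boxes overlap, from 0 (no overlap) to 1 (identical boxes). The sketch below is only an illustration of that measure, not Handpose's internal code. The `iou` helper is hypothetical, and the `{topLeft, bottomRight}` box shape is an assumption borrowed from the `boundingBox` data described under Data.

```js
// Hypothetical helper: intersection over union of two axis-aligned boxes.
// Each box is assumed to look like {topLeft: [x, y], bottomRight: [x, y]}.
function iou(a, b) {
  // Edges of the overlapping region (if any)
  const left = Math.max(a.topLeft[0], b.topLeft[0])
  const top = Math.max(a.topLeft[1], b.topLeft[1])
  const right = Math.min(a.bottomRight[0], b.bottomRight[0])
  const bottom = Math.min(a.bottomRight[1], b.bottomRight[1])

  // Clamp to 0 so disjoint boxes produce no intersection area
  const intersection = Math.max(0, right - left) * Math.max(0, bottom - top)
  const area = box =>
    (box.bottomRight[0] - box.topLeft[0]) * (box.bottomRight[1] - box.topLeft[1])
  const union = area(a) + area(b) - intersection

  return union > 0 ? intersection / union : 0
}

const boxA = {topLeft: [0, 0], bottomRight: [100, 100]}
const boxB = {topLeft: [50, 0], bottomRight: [150, 100]}

// Half of each box overlaps the other, so the IoU is about 0.33
console.log(iou(boxA, boxB))
```

With `iouThreshold: 0.3`, two candidate boxes overlapping this much would typically count as the same detection, and non-maximum suppression would keep only the higher-scoring one.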

# Data

```js
// Get the [x, y, z] of various landmarks
// Thumb tip
handsfree.data.handpose.landmarks[4]
// Index fingertip
handsfree.data.handpose.landmarks[8]

// Normalized landmark values from [0 - 1] for the x and y
// The z isn't really depth but "units" away from the camera so those aren't normalized
handsfree.data.handpose.normalized[0]

// How confident the model is that a hand is in view [0 - 1]
handsfree.data.handpose.handInViewConfidence

// The top left and bottom right pixels containing the hand in the iframe
handsfree.data.handpose.boundingBox = {
  topLeft: [x, y],
  bottomRight: [x, y]
}

// [x, y, z] of various hand landmarks
handsfree.data.handpose.annotations = {
  thumb: [...[x, y, z]], // 4 landmarks
  indexFinger: [...[x, y, z]], // 4 landmarks
  middleFinger: [...[x, y, z]], // 4 landmarks
  ringFinger: [...[x, y, z]], // 4 landmarks
  pinkyFinger: [...[x, y, z]], // 4 landmarks
  palmBase: [[x, y, z]], // 1 landmark
}
```
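As a small example of working with the landmark data above, the sketch below measures the distance between the thumb tip (`landmarks[4]`) and the index fingertip (`landmarks[8]`) to guess whether the hand is pinching. Both helpers are hypothetical and not part of Handsfree.js, and the default `maxDistance` of 40 is an arbitrary assumption that would need tuning against real data.

```js
// Hypothetical helper: Euclidean distance between two [x, y, z] landmarks
function landmarkDistance(a, b) {
  return Math.hypot(a[0] - b[0], a[1] - b[1], a[2] - b[2])
}

// Hypothetical helper: true when the thumb tip (4) and index fingertip (8)
// are within `maxDistance` of each other. The default is an assumed value.
function isPinching(landmarks, maxDistance = 40) {
  if (!landmarks || landmarks.length < 9) return false
  return landmarkDistance(landmarks[4], landmarks[8]) <= maxDistance
}

// Made-up sample data standing in for handsfree.data.handpose.landmarks
const sampleLandmarks = Array.from({length: 21}, () => [0, 0, 0])
sampleLandmarks[4] = [100, 200, -10] // thumb tip
sampleLandmarks[8] = [110, 210, -5] // index fingertip

console.log(isPinching(sampleLandmarks)) // true: the tips are 15 units apart
```

In a real project you would pass `handsfree.data.handpose.landmarks` instead of the sample array.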

# Examples of accessing the data

```js
handsfree = new Handsfree({handpose: true})
handsfree.start()

// From anywhere
handsfree.data.handpose.landmarks

// From inside a plugin
handsfree.use('logger', data => {
  if (!data.handpose) return

  console.log(data.handpose.boundingBox)
})

// From an event
document.addEventListener('handsfree-data', event => {
  const data = event.detail
  if (!data.handpose) return

  console.log(data.handpose.annotations.indexFinger)
})
```
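The guards above only check that `data.handpose` exists. If you also want to ignore frames where the model is unsure, one option is to gate on `handInViewConfidence` as well. This is a minimal sketch: `hasConfidentHand` and the plugin name `confidentLogger` are hypothetical, and the default of 0.8 is an assumption that simply mirrors the `detectionConfidence` value from the config.

```js
// Hypothetical helper (not part of Handsfree.js): true only when handpose data
// exists and the model is at least `minConfidence` sure a hand is in view.
function hasConfidentHand(data, minConfidence = 0.8) {
  return Boolean(
    data &&
    data.handpose &&
    data.handpose.handInViewConfidence >= minConfidence
  )
}

// Register the plugin only when a Handsfree instance named `handsfree` exists,
// so this snippet is safe to run on its own
if (typeof handsfree !== 'undefined') {
  handsfree.use('confidentLogger', data => {
    if (!hasConfidentHand(data)) return

    console.log(data.handpose.boundingBox)
  })
}
```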

# Three.js Properties

The following helper Three.js properties are also available:

```js
// A THREE Arrow object protruding from the index finger
// - You can use this to calculate pointing vectors
handsfree.model.handpose.three.arrow

// The THREE camera
handsfree.model.handpose.three.camera

// An additional mesh that is positioned at the center of the palm
// - This is where we raycast the Hand Pointer from
handsfree.model.handpose.three.centerPalmObj

// An array of the meshes representing each skeleton joint
// - You can tap into the rotation to calculate pointing vectors for each fingertip
handsfree.model.handpose.three.meshes

// A reusable THREE raycaster
// @see https://threejs.org/docs/#api/en/core/Raycaster
handsfree.model.handpose.three.raycaster

// The THREE scene and renderer used to hold the hand model
handsfree.model.handpose.three.renderer
handsfree.model.handpose.three.scene

// The screen object. The Hand Pointer raycasts from the centerPalmObj
// onto this screen object. The point of intersection is then mapped to
// the device screen to position the pointer
handsfree.model.handpose.three.screen
```
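To illustrate the last step, mapping a point of intersection to the device screen, here is one plausible way to do that mapping in plain JavaScript. It is a sketch under stated assumptions, not the code Handsfree.js actually uses: `planePointToScreen` is a hypothetical helper, the plane is assumed to be flat and centered at the origin with +y pointing up, and the plane and viewport sizes are made-up values.

```js
// Hypothetical helper: map a point on a flat plane to screen pixels.
// Assumes the plane is centered at the origin with +x right and +y up,
// while screen pixels start at the top left with +y pointing down.
function planePointToScreen(point, plane, viewport) {
  return {
    x: (point.x / plane.width + 0.5) * viewport.width,
    y: (0.5 - point.y / plane.height) * viewport.height
  }
}

// Made-up sizes for illustration
const plane = {width: 4, height: 3}
const viewport = {width: 1920, height: 1080}

// The center of the plane lands in the middle of the viewport
console.log(planePointToScreen({x: 0, y: 0}, plane, viewport)) // {x: 960, y: 540}

// The top left corner of the plane lands at pixel (0, 0)
console.log(planePointToScreen({x: -2, y: 1.5}, plane, viewport)) // {x: 0, y: 0}
```

In a Three.js scene, the input point would typically come from the `point` of a hit returned by `raycaster.intersectObject(...)`, converted into the plane's local coordinates first.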

# Examples

Handsfree.js now has #TensorFlow #Handpose:

- 21 3D landmarks (only 1 hand for now)

- A fingertip raycaster for pointing at things

- No plugins yet but will have some basic gestures added soon

Basic documentation + demos: https://t.co/LH0qYbOG4G #MadeWithTFJS #WebXR pic.twitter.com/qT0lWgtN7P

Oz Ramos (@MIDIBlocks), January 7, 2021

I made Handsfree Jenga 🧱👌

It's kinda buggy still but this demos how to use Hand Pointers to interact w/ physics in a Three.js scene #MadeWithTFJS

Try it: https://t.co/ACuamUga0r

Handsfree.js hook: https://t.co/UybmDLnVFE

Docs: https://t.co/WpNd3kLp8r pic.twitter.com/bEdi5Gm5z7

Oz Ramos (@MIDIBlocks), December 4, 2020

Some progress on getting 2 hands tracked with #Handpose!

Since it can't detect 2 hands yet what I do is:

- Check for a hand

- Draw a rectangle over the hand

- Check for a hand again...since 1st hand is covered it'll now detect 2nd

Kinda slow still but getting there 🖐👁👄👁🖐 pic.twitter.com/xcdo14Txwn

Oz Ramos (@MIDIBlocks), December 10, 2020